The Bunny That Broke the Transformer

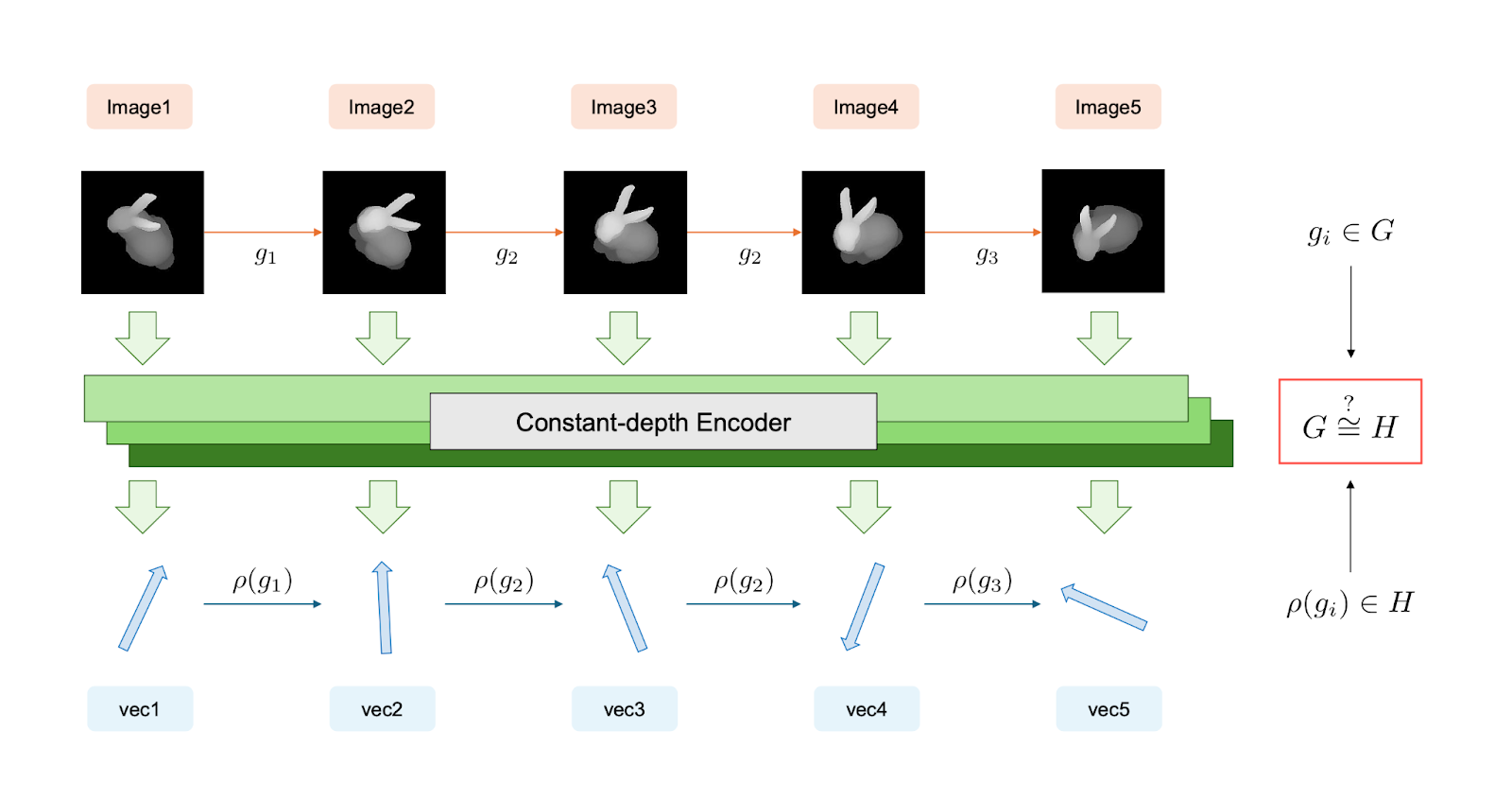

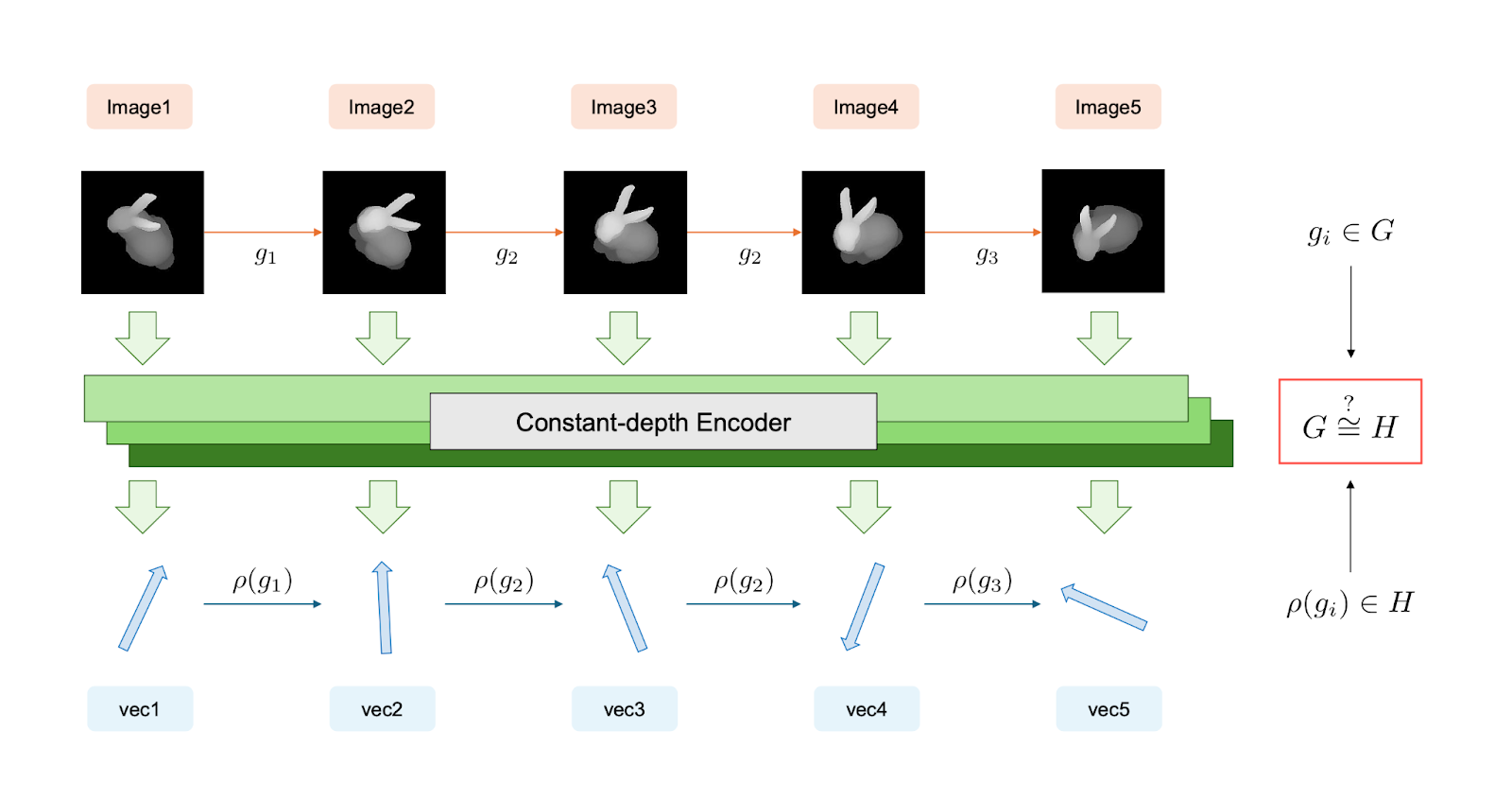

Three researchers in Nanjing proved that Vision Transformers can't learn 3D spatial reasoning. The ceiling isn't data or training or scale. It's the architecture itself.

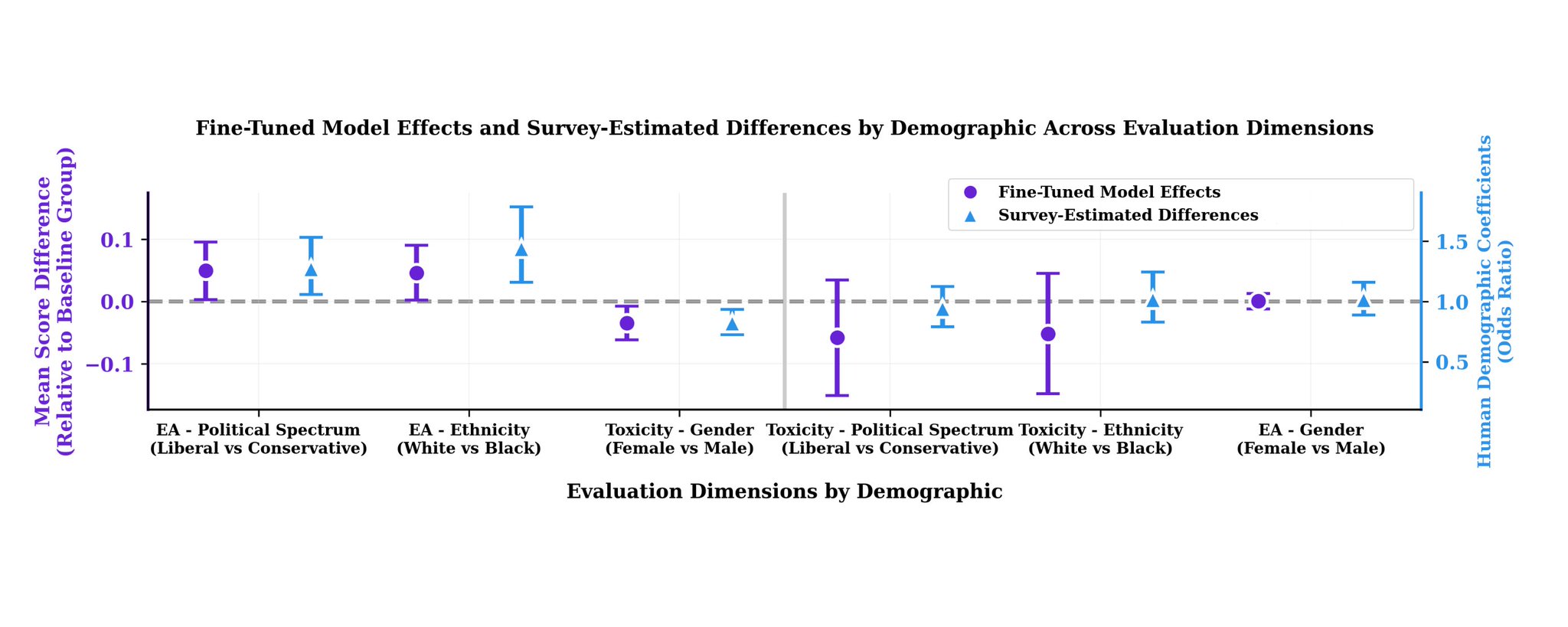

1,095 people rated AI safety. They couldn't agree. Keeping disagreement in training data beats throwing it away by 53%.
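To make "keeping disagreement" concrete, here is a minimal sketch of the generic soft-label technique: train against the distribution of rater judgments instead of a single majority-vote label. It assumes a simple two-class rating scheme; the class names, the five hypothetical ratings, and the helper functions are illustrative only, this is not necessarily the study's method, and it does not reproduce the 53% figure.

```python
import math
from collections import Counter

def majority_label(ratings):
    """Hard label: keep only the most common rating, discarding disagreement."""
    return Counter(ratings).most_common(1)[0][0]

def soft_label(ratings, classes):
    """Soft label: keep the full distribution of annotator ratings."""
    counts = Counter(ratings)
    return {c: counts[c] / len(ratings) for c in classes}

def one_hot(label, classes):
    """Turn a single hard label into a probability vector."""
    return {c: 1.0 if c == label else 0.0 for c in classes}

def cross_entropy(pred, target):
    """Cross-entropy of predicted probabilities against a (possibly soft) target."""
    return -sum(p_t * math.log(pred[c]) for c, p_t in target.items() if p_t > 0)

# Hypothetical example: five raters judge one item and split 3-2
classes = ["safe", "unsafe"]
ratings = ["safe", "safe", "unsafe", "safe", "unsafe"]

hard = one_hot(majority_label(ratings), classes)  # {'safe': 1.0, 'unsafe': 0.0}
soft = soft_label(ratings, classes)               # {'safe': 0.6, 'unsafe': 0.4}

# A model that mirrors the raters' split vs. an overconfident one
calibrated = {"safe": 0.6, "unsafe": 0.4}
overconfident = {"safe": 0.99, "unsafe": 0.01}

print("soft target:", cross_entropy(calibrated, soft), cross_entropy(overconfident, soft))
print("hard target:", cross_entropy(calibrated, hard), cross_entropy(overconfident, hard))
```

Against the hard label, the overconfident model gets the lower loss; against the soft label, the calibrated one does. That is the basic intuition for why throwing away disagreement can push a model toward false certainty.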

An independent researcher built a framework where AI hallucinations aren't minimized but mathematically impossible. Evidence on a sphere. Contradiction collapses the region. Refusal becomes a geometric necessity.

A new paper argues that flat error curves across resolutions aren't evidence of generalization. They're a frequency ceiling nobody was looking for.
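A toy illustration of how a frequency ceiling can masquerade as resolution-independence, assuming a hypothetical "model" that reproduces a signal exactly but only up to a fixed cutoff frequency (`max_freq`). The signal components and the cutoff are made up for the example; this is not the paper's setup. Because the error is dominated by the component the model cannot represent, it stays the same no matter how finely the grid is sampled.

```python
import math

# Hypothetical target signal as sine components: (frequency, amplitude)
COMPONENTS = [(1, 1.0), (3, 0.3), (20, 0.5)]

def signal(x, components):
    """Evaluate a sum of sines at point x in [0, 1)."""
    return sum(a * math.sin(2 * math.pi * f * x) for f, a in components)

def band_limited(components, max_freq):
    """Hypothetical model with a hard frequency ceiling: drops everything above max_freq."""
    return [(f, a) for f, a in components if f <= max_freq]

def rmse_at_resolution(n, max_freq=8):
    """Root-mean-square error of the band-limited model on a uniform n-point grid."""
    model = band_limited(COMPONENTS, max_freq)
    xs = [i / n for i in range(n)]
    sq_errs = [(signal(x, COMPONENTS) - signal(x, model)) ** 2 for x in xs]
    return math.sqrt(sum(sq_errs) / n)

# Error is flat across resolutions: it equals the RMS of the missing 20-cycle term
for n in (64, 128, 256, 512):
    print(n, round(rmse_at_resolution(n), 4))
```

Every resolution reports the same RMSE, 0.5/√2 ≈ 0.354, exactly the energy of the frequency-20 component. A flat curve here says nothing about generalization; it is the signature of what the model can never represent.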

Current LLM training frameworks pick one parallelism strategy and pray. ParaDySe hot-switches strategies layer by layer based on actual input length.

Researchers in South Korea weaponized prompt injection for defense. The more capable the AI attacker, the stronger the kill switch.